Definition of AI Voice Generators

Imagine having a robot that can talk just like a human. AI Voice Generators are special programs that can create speech from text.

This means you can type something, and the computer will read it out loud in a voice that sounds almost like a real person.

These voices can be used in many different ways, from virtual assistants like Siri and Alexa to automated customer service calls.

Importance and Relevance in Today’s World

Think about how many times you hear a computer-generated voice every day. AI Voice Generators are very important because they help make our interactions with technology easier and more natural. Here are some reasons why they are important:

- Accessibility: Imagine if you couldn’t see or had trouble reading. AI Voice Generators can read out loud for you, making it easier to access information. This is very helpful for people with disabilities.

- Convenience: When you ask your phone for directions or to play a song, AI Voice Generators respond quickly, making your life more convenient. They save time and make it easier to multitask.

- Customer Service: Imagine calling a company and having to wait on hold forever. AI Voice Generators can help answer common questions quickly, providing better and faster customer service.

- Education: Think about learning a new language. AI Voice Generators can help by pronouncing words correctly and providing interactive lessons. This makes learning more engaging and effective.

How AI Voice Generators Work

Imagine baking a cake. You need ingredients, a recipe, and some tools. AI Voice Generators also need ingredients (data), a recipe (algorithms), and tools (software and hardware). Here’s how they work:

- Data Collection: First, the AI needs to learn how people talk. Scientists collect a lot of recordings of human speech. This is like gathering all the ingredients for a cake.

- Training the AI: Next, the AI is trained to understand how to convert text into speech. This involves using machine learning algorithms. Think of this as following a recipe to mix the ingredients together. The AI learns patterns in the speech data, such as how words are pronounced and how sentences flow.

- Creating the Voice: The trained AI then uses what it has learned to create a digital voice. This is like baking the cake and getting it ready to eat. The AI can now take any text you give it and turn it into spoken words.

- Synthesizing Speech: When you type something for the AI to read, it processes the text, breaks it down into sounds, and then uses its digital voice to speak those sounds. This is like slicing the cake and serving it to you.

By understanding AI Voice Generators, we can appreciate how they make our interactions with technology more natural and helpful. These amazing tools are shaping our world and making it more accessible and efficient!

Overview of the Technology Behind AI Voice Generation

Imagine if you could teach your toy robot to talk like you. AI voice generation is the technology that makes this possible. It involves converting written text into spoken words using computers. This technology relies on several key components:

- Text-to-Speech (TTS) Systems: These systems take written text and turn it into speech. They have two main parts: the front end and the back end. The front end processes the text, expanding things like numbers and abbreviations into full words and working out which sounds (phonemes) each word contains, while the back end turns those sounds into audible speech.

- Speech Synthesis: This is the process of generating human-like speech. Early methods used pre-recorded sounds from human voices, but modern systems use complex algorithms to create more natural and expressive speech.

- Natural Language Processing (NLP): NLP helps the computer understand the context and meaning of the text. It ensures that the speech sounds natural and makes sense.
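
To make the front end a little more concrete, here is a minimal sketch in Python. It is only an illustration: the tiny `LEXICON` and `DIGITS` tables and the function names are made up for this example, and real systems rely on much larger pronunciation dictionaries and learned models.

```python
import re

# Hypothetical mini pronunciation dictionary (ARPAbet-style symbols).
# Real systems use large lexicons plus learned letter-to-sound models.
LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
    "two": ["T", "UW"],
}

# Toy number-expansion table, also an assumption for this sketch.
DIGITS = {"2": "two"}


def normalize(text: str) -> list[str]:
    """Lowercase the text, split it into tokens, and expand known digits."""
    tokens = re.findall(r"[a-z]+|\d", text.lower())
    return [DIGITS.get(token, token) for token in tokens]


def to_phonemes(words: list[str]) -> list[str]:
    """Look each word up; spell out the letters of unknown words."""
    phonemes = []
    for word in words:
        phonemes.extend(LEXICON.get(word, list(word.upper())))
    return phonemes


def front_end(text: str) -> list[str]:
    """Text in, phoneme sequence out. A back end would turn this into audio."""
    return to_phonemes(normalize(text))


print(front_end("Hello, world 2"))
```

The back end is left out on purpose: it is the part that turns the phoneme list into an actual waveform, and that is where the synthesis methods described later come in.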

The Role of Machine Learning and Neural Networks

Imagine you’re learning to play a musical instrument. At first, you need to practice a lot and learn from your mistakes. AI voice generators learn in a similar way using machine learning and neural networks.

- Machine Learning: This is like teaching a computer to learn from data. For AI voice generation, the computer is fed lots of recordings of human speech. It learns how to mimic these recordings by finding patterns in the data. This process is similar to practicing your instrument by listening to and imitating songs.

- Neural Networks: These are special algorithms inspired by the way our brains work. Think of neural networks as layers of connected nodes, like neurons in your brain, that process information. In AI voice generation, neural networks analyze speech data and learn how to produce natural-sounding voices.

For example, DeepMind’s WaveNet uses neural networks to generate speech. It models raw audio waveforms (the sound wave itself, stored as a long series of numeric samples) and produces new audio one sample at a time, which yields very realistic and expressive voices. This is like a musician who has mastered their instrument and can play beautiful music.

History of AI Voice Generators

Imagine taking a time machine back to when computers first learned to talk. The journey of AI voice generators started a long time ago and has seen many exciting developments:

- Early Beginnings (1950s-1970s): The first speech synthesis systems were very basic. In 1961, researchers at Bell Labs programmed an IBM 704 computer to sing “Daisy Bell” in a robotic voice. This was one of the earliest examples of a computer generating speech.

- Development and Improvement (1980s-2000s): During this time, TTS systems improved a lot. They started using concatenative synthesis, which means joining together small snippets of recorded speech to form complete sentences. This made the speech sound more natural, but it was still limited by the quality of the recordings.

- Modern Advances (2010s-Present): The biggest leaps in AI voice generation happened with the advent of machine learning and neural networks. Technologies like WaveNet by DeepMind and Tacotron by Google have created voices that are almost indistinguishable from human speech. These systems can produce highly expressive and natural-sounding speech by learning from vast amounts of data.

By understanding the technology, machine learning, neural networks, and the history of AI voice generation, we can appreciate how far this amazing technology has come and how it continues to evolve, making our interactions with machines more natural and human-like!

Early Developments in Voice Synthesis

Imagine building a simple toy that can say a few words. The early developments in voice synthesis were like that: basic but groundbreaking. Here are some key moments:

- The Voder (1939): Imagine a machine at a big fair that can mimic human speech. The Voder was one of the first devices that could produce human-like sounds. It was introduced at the 1939 New York World’s Fair and operated by an expert using a keyboard and foot pedals to produce sounds that resembled speech.

- Bell Labs (1961): Think of a computer singing a song. Researchers at Bell Labs programmed an IBM 704 computer to sing “Daisy Bell.” This was a major step forward and showed that computers could be programmed to generate speech sounds.

- Linear Predictive Coding (1970s): Imagine using a formula to predict what sound comes next. Linear Predictive Coding (LPC) is a technique for analyzing and synthesizing the human voice efficiently. It estimates each audio sample as a weighted sum of the samples just before it, so a voice can be described with a small set of numbers. This allowed computers to store and create more natural-sounding speech.
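
The idea of predicting the next sound from the previous ones can be tried on a toy signal. The sketch below is a simplified illustration, not a full LPC implementation: it fits only two weights by least squares, and a pure 200 Hz tone stands in for real speech. The function names are invented for this example.

```python
import math


def fit_order2_predictor(signal):
    """Least-squares fit of s[n] ~ a1*s[n-1] + a2*s[n-2]."""
    r11 = r12 = r22 = b1 = b2 = 0.0
    for n in range(2, len(signal)):
        prev1, prev2, current = signal[n - 1], signal[n - 2], signal[n]
        r11 += prev1 * prev1
        r12 += prev1 * prev2
        r22 += prev2 * prev2
        b1 += current * prev1
        b2 += current * prev2
    # Solve the 2x2 normal equations with Cramer's rule.
    det = r11 * r22 - r12 * r12
    a1 = (b1 * r22 - b2 * r12) / det
    a2 = (r11 * b2 - r12 * b1) / det
    return a1, a2


def predict_next(signal, a1, a2):
    """Predict the next sample from the last two samples."""
    return a1 * signal[-1] + a2 * signal[-2]


# A 200 Hz tone sampled at 8000 Hz stands in for a voiced sound.
omega = 2 * math.pi * 200 / 8000
tone = [math.sin(omega * n) for n in range(200)]

history, actual = tone[:-1], tone[-1]
a1, a2 = fit_order2_predictor(history)
error = abs(predict_next(history, a1, a2) - actual)
print(a1, a2, error)
```

For a pure tone, two weights are enough to predict the next sample almost perfectly. Real speech is far more complex, so practical LPC uses more weights and refits them many times per second.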

Key Milestones in AI Voice Technology

Think of the journey of AI voice technology as a series of exciting discoveries:

- Concatenative Synthesis (1980s-1990s): Imagine cutting and pasting pieces of recorded speech to create new sentences. This technique, called concatenative synthesis, was used to improve the naturalness of computer-generated speech. It involved splicing together small segments of recorded speech.

- Festival Speech Synthesis System (1990s): Think of a tool that lets you create different voices. The Festival Speech Synthesis System, developed at the University of Edinburgh, was a flexible platform that allowed researchers to experiment with different speech synthesis techniques and create custom voices.

- HMM-Based Synthesis (2000s): Imagine teaching a computer to speak by learning patterns. Hidden Markov Models (HMM) were used to model speech patterns statistically. This approach improved the smoothness and naturalness of synthetic speech.

- WaveNet by DeepMind (2016): Think of a robot learning to talk by listening to human speech. WaveNet, developed by DeepMind, used deep neural networks to generate speech waveforms from scratch. It produced highly realistic and expressive speech by learning from vast amounts of data.

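- Tacotron by Google (2017): Imagine a system that can read any text and turn it into speech naturally. Tacotron used a neural network to convert text into a spectrogram (a map of which frequencies are present at each moment), which a separate step then turns into an audio waveform. Learning this mapping directly from examples made the speech sound more fluid and natural.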

Types of AI Voice Generators

Think of different kinds of AI voice generators like various musical instruments, each with its own way of producing sound:

- Concatenative Synthesis: Imagine using building blocks to create a wall. Concatenative synthesis works by joining together small snippets of recorded speech. Each snippet represents a tiny part of speech, like a syllable or phoneme. When these snippets are combined, they form complete words and sentences. This method sounds natural but can be limited by the number of available recordings.

- Formant Synthesis: Think of a machine creating sounds based on rules. Formant synthesis uses mathematical models to simulate the human vocal tract. It doesn’t rely on recordings but instead generates speech sounds based on acoustic parameters. This method is flexible but can sound robotic.

- Statistical Parametric Synthesis: Imagine using statistics to predict what comes next. This method uses models like Hidden Markov Models (HMM) to generate speech. It analyzes speech patterns and predicts acoustic parameters such as pitch and spectral shape, which a vocoder then turns into sound. The result is a smooth, consistent voice.

- Neural Network-Based Synthesis: Think of a computer learning to talk by listening to lots of conversations. Neural network-based synthesis, like WaveNet and Tacotron, uses deep learning to generate speech. These systems analyze large amounts of speech data and learn to produce highly realistic and expressive voices by understanding complex patterns in the data.
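
Of the four types above, concatenative synthesis is the easiest to sketch in a few lines. The example below is a toy illustration under clear assumptions: short sine bursts stand in for recorded speech snippets, the `UNITS` inventory is made up, and a simple linear crossfade smooths each join.

```python
import math

SAMPLE_RATE = 8000


def tone(freq_hz, count=400):
    """Stand-in for a recorded speech unit: a short sine burst."""
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE) for n in range(count)]


# Hypothetical unit inventory. A real system stores thousands of
# recorded snippets, not synthetic tones.
UNITS = {"HH": tone(300), "AH": tone(700), "L": tone(400), "OW": tone(500)}


def concatenate(unit_names, overlap=40):
    """Join units in order, crossfading `overlap` samples at each seam."""
    output = list(UNITS[unit_names[0]])
    for name in unit_names[1:]:
        unit = UNITS[name]
        start = len(output) - overlap
        for i in range(overlap):
            fade_in = i / overlap
            # Blend the tail of what we have with the head of the new unit.
            output[start + i] = output[start + i] * (1 - fade_in) + unit[i] * fade_in
        output.extend(unit[overlap:])
    return output


speech = concatenate(["HH", "AH", "L", "OW"])
print(len(speech), "samples")
```

The crossfade hints at why this method is limited by its recordings: the joins only sound natural when neighboring snippets already match well in pitch and loudness.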

By exploring the early developments, key milestones, and different types of AI voice generators, we can see how this amazing technology has evolved to make our interactions with machines more natural and human-like!

Text-to-Speech (TTS) Systems

Imagine you want to hear your favorite book read out loud by a computer. Text-to-speech (TTS) systems are like magic storytellers that can turn written text into spoken words. Here’s how they work and why they’re important:

- How TTS Works: TTS systems first analyze the text you give them. They break it down into smaller parts like sentences and words. Then, they convert these parts into sounds using speech synthesis techniques. Finally, they combine these sounds to create speech that you can hear.

- Uses of TTS: TTS systems are used in many places. For example, they help people with visual impairments by reading out loud what’s on their screens. They are also used in virtual assistants like Siri and Alexa, which talk to you and answer your questions.

- Examples: Popular tools that use TTS include Google’s Text-to-Speech, Amazon Polly, Magic Light AI, and Apple’s VoiceOver screen reader.

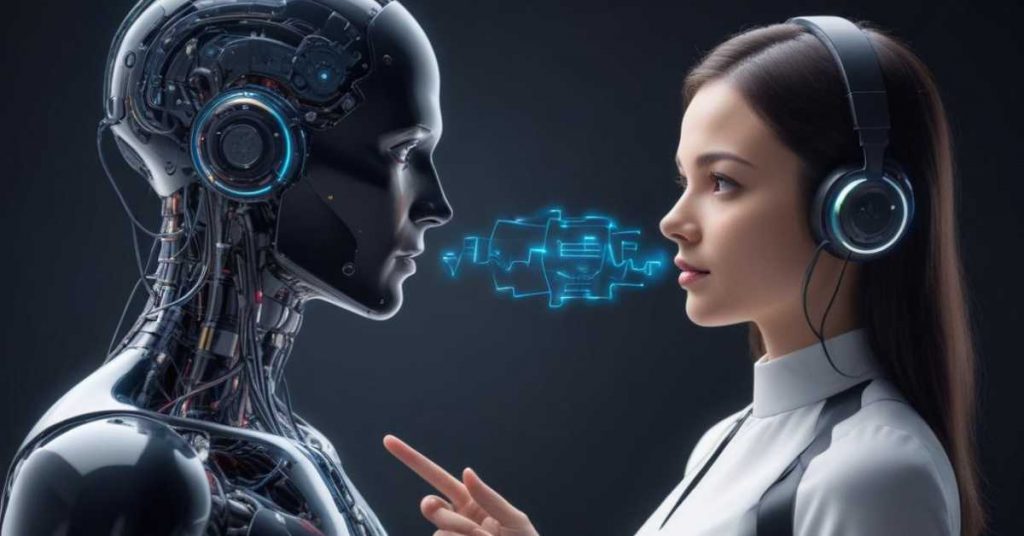

Speech-to-Speech (STS) Systems

Imagine you speak in English, and your friend only understands Spanish. Speech-to-Speech (STS) systems are like super translators that can convert your spoken words into another language’s spoken words instantly.

- How STS Works: STS systems first use automatic speech recognition (ASR) to understand and convert your speech into text. Next, they use machine translation to translate the text into the target language. Finally, they use TTS technology to convert the translated text back into speech.

- Uses of STS: These systems are very useful for travelers, allowing them to communicate with people who speak different languages. They are also used in international business meetings and customer service to help people understand each other.

- Examples: Popular STS systems include Google Translate’s voice feature and Microsoft Translator.
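
The three-step chain described above (speech recognition, then translation, then TTS) can be sketched as three functions passed along in order. Everything here is a stand-in: the function names, the tiny `PHRASEBOOK`, and the fake "audio" bytes are assumptions made for illustration, not any real product's API.

```python
from typing import Callable


def recognize_speech(audio: bytes) -> str:
    """Pretend ASR: in this toy, the 'audio' is just UTF-8 text bytes."""
    return audio.decode("utf-8")


# Hypothetical English-to-Spanish phrasebook standing in for machine translation.
PHRASEBOOK = {"hello": "hola", "thank you": "gracias"}


def translate(text: str) -> str:
    """Pretend MT: look the phrase up, or pass it through unchanged."""
    return PHRASEBOOK.get(text.lower(), text)


def synthesize(text: str) -> bytes:
    """Pretend TTS: wrap the text in a marker instead of producing sound."""
    return f"<audio:{text}>".encode("utf-8")


def speech_to_speech(
    audio: bytes,
    asr: Callable[[bytes], str] = recognize_speech,
    mt: Callable[[str], str] = translate,
    tts: Callable[[str], bytes] = synthesize,
) -> bytes:
    """Run the three stages in order: speech -> text -> translated text -> speech."""
    text = asr(audio)
    translated = mt(text)
    return tts(translated)


print(speech_to_speech(b"Hello"))
```

Because each stage is passed in as a plain function, any one of them could be swapped for a real service without touching the other two.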

Voice Cloning and Synthesis

Imagine having a robot that can talk exactly like you. Voice cloning and synthesis are technologies that can create a digital copy of someone’s voice. This means the computer can generate speech that sounds just like a specific person.

- How Voice Cloning Works: To clone a voice, the system needs samples of the person’s speech. It analyzes these samples to understand the unique characteristics of the voice, like pitch, tone, and accent. Then, it uses this information to create a digital model of the voice.

- Uses of Voice Cloning: This technology is used in entertainment to create realistic voices for characters in movies and video games. It’s also used to help people who have lost their voices due to illness by creating synthetic voices that sound like their natural ones.

- Examples: Companies like Lyrebird (now part of Descript) and Respeecher offer voice cloning services.
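
One of the voice characteristics mentioned above, pitch, can be measured with a classic technique called autocorrelation. The sketch below is only a small illustration of that single measurement. It assumes a clean 200 Hz tone in place of a real voice sample, and it is not how commercial cloning services work internally; those learn much richer descriptions of a voice.

```python
import math


def estimate_pitch(samples, sample_rate, low_hz=50, high_hz=400):
    """Estimate the fundamental frequency by finding the lag at which
    the signal best matches a shifted copy of itself.
    Assumes len(samples) is larger than sample_rate // low_hz."""
    min_lag = sample_rate // high_hz
    max_lag = sample_rate // low_hz
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[n] * samples[n + lag] for n in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


# A 200 Hz tone sampled at 8000 Hz stands in for a recorded voice.
rate = 8000
voice_like = [math.sin(2 * math.pi * 200 * n / rate) for n in range(400)]

print(estimate_pitch(voice_like, rate), "Hz")
```

A number like this is just one ingredient. Tone and accent are much harder to capture, which is why cloning systems need enough speech samples to learn from.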

Key Features of Modern AI Voice Generators

Modern AI voice generators are like super-smart storytellers and translators with lots of amazing features. Here are some key features that make them so powerful and useful:

- Natural Sounding Voices: Modern AI voice generators use advanced neural networks to produce voices that sound very natural and human-like. This makes interactions with technology feel more personal and engaging.

- Customization: These systems allow users to customize the voice’s pitch, speed, and accent. This means you can create a voice that matches your preferences or brand’s identity.

- Multilingual Support: AI voice generators can speak multiple languages fluently. This is great for creating content that can reach a global audience or for providing customer support in different languages.

- Emotion and Expressiveness: Modern systems can add emotions and expressiveness to speech. They can make the voice sound happy, sad, excited, or serious, depending on the context. This makes the speech more engaging and relatable.

- Real-Time Processing: Some AI voice generators can convert text to speech in real time. This is useful for live applications, like virtual assistants and real-time translations.

By understanding TTS, STS, voice cloning, and the key features of modern AI voice generators, we can see how these technologies are making our interactions with machines more natural and helpful every day!

Naturalness and Fluidity of Speech

Imagine listening to a robot that talks just like a human. Modern AI voice generators are designed to make speech sound natural and fluid. Here’s how they achieve this:

- Neural Networks: These are like brainy machines that learn from lots of data. AI voice generators use neural networks to understand the patterns of human speech. This helps them produce voices that sound smooth and realistic, just like how people talk.

- Context Understanding: Think of how you change your tone when you’re excited or sad. AI systems can understand the context of the text and adjust the voice accordingly. This means the voice can sound happy, serious, or even surprised, making it more engaging.

- Pauses and Intonation: Imagine reading a story out loud. You pause at commas and raise your voice at the end of a question. AI voice generators use these natural speech patterns to make the voice sound more human-like.

Customization Options

Imagine being able to design your own robot’s voice. AI voice generators offer many customization options to fit your needs:

- Voice Pitch and Speed: You can change how high or low the voice sounds (pitch) and how fast or slow it speaks (speed). This helps create the perfect voice for different characters or applications.

- Accent and Language: Imagine having a robot that can speak with an American, British, or even Australian accent. AI voice generators can be customized to speak in different accents and dialects, making them more relatable to different audiences.

- Emotional Tone: Think of how a storyteller changes their voice to match the story. AI voice generators can be adjusted to sound happy, sad, excited, or calm, depending on the situation. This makes the speech more expressive and engaging.
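
Many TTS services accept pitch and speed settings written in SSML (Speech Synthesis Markup Language), a W3C standard. The sketch below builds a small SSML snippet using its `prosody` element. The helper name `build_ssml` is made up for this example, and which `rate` and `pitch` values a given service or voice actually supports is an assumption you would need to check in that service's documentation.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, rate: str = "medium", pitch: str = "medium") -> str:
    """Wrap text in an SSML prosody element that sets speed and pitch.

    `rate` and `pitch` use SSML keyword values such as "slow" or "high".
    Support for each value varies by service and voice.
    """
    return (
        "<speak>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{escape(text)}"  # escape &, < and > so the markup stays valid
        "</prosody>"
        "</speak>"
    )


print(build_ssml("Tom & Jerry are back!", rate="slow", pitch="high"))
```

The resulting string is what you would send to a service in place of plain text. Emotional tone has no equally standard markup, so that setting tends to be specific to each tool.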

Multi-Language Support

Imagine a robot that can speak any language you want. Modern AI voice generators can support multiple languages, making them useful for many purposes:

- Global Communication: Whether you need to communicate with someone in another country or create content for a global audience, multi-language support ensures your message can be understood by people who speak different languages.

- Language Learning: Think of learning a new language with a tutor who can speak it perfectly. AI voice generators can help by providing correct pronunciations and conversational practice in various languages.

- Accessibility: Imagine someone who cannot read but needs information in their native language. AI voice generators can read out text in multiple languages, making information accessible to more people.

Popular AI Voice Generator Tools

Imagine having a toolbox full of amazing gadgets. Here are some popular AI voice generator tools that you can use:

- Google Text-to-Speech: This is like a magic wand that turns text into natural-sounding speech. It supports multiple languages and accents, and you can use it on Android devices or integrate it into apps.

- Amazon Polly: Think of Amazon Polly as a storyteller with many voices. It offers lifelike speech and supports a wide range of languages. You can customize the voice’s pitch and speed, and even add emotions to the speech.

- IBM Watson Text to Speech: Imagine a super-smart assistant that can read out text with great accuracy. IBM Watson’s TTS service provides high-quality speech synthesis and can be used in various applications, from virtual assistants to customer service.

- Microsoft Azure Cognitive Services: This tool is like a versatile craftsman. It offers customizable TTS services with natural-sounding voices, supports multiple languages, and provides easy integration with other Microsoft services.

- Voice Dream Reader: Think of a friendly robot that can read anything you want. Voice Dream Reader is an app that converts text into speech, making it easier for people with reading difficulties to access information.

By exploring the naturalness and fluidity of speech, customization options, multi-language support, and popular AI voice generator tools, we can see how these technologies are making our interactions with machines more seamless and personalized every day!

Overview of Leading AI Voice Generation Tools

Imagine having a magic kit full of amazing tools that can make computers talk just like humans. Here are some of the best AI voice generation tools and what makes each one special:

- Google Text-to-Speech

- Amazon Polly

- IBM Watson Text to Speech

- Microsoft Azure Cognitive Services

- Voice Dream Reader

Features and Benefits of Each Tool

1. Google Text-to-Speech

- Features: Google Text-to-Speech can read out text in many different languages and accents. It’s available on Android devices and can be integrated into various applications.

- Benefits: This tool makes apps more accessible by providing spoken feedback. It’s great for navigation apps, virtual assistants, and reading eBooks out loud.

2. Amazon Polly

- Features: Amazon Polly offers lifelike speech in many languages. It uses advanced deep learning technologies to create natural-sounding voices. You can customize the speech with different tones and speeds.

- Benefits: Polly is perfect for creating engaging customer interactions, such as chatbots and automated call centers. It can also convert large volumes of text into speech, making it useful for eLearning and news reading apps.

3. IBM Watson Text to Speech

- Features: IBM Watson provides high-quality speech synthesis with accurate pronunciations and intonations. It supports multiple languages and dialects.

- Benefits: This tool is ideal for creating conversational interfaces, virtual assistants, and enhancing accessibility for users with visual impairments. It’s also used in customer service applications to provide real-time responses.

4. Microsoft Azure Cognitive Services

- Features: Microsoft Azure offers customizable text-to-speech services with a wide range of natural-sounding voices. It supports multiple languages and can be easily integrated with other Microsoft services.

- Benefits: This tool is great for creating immersive user experiences in games, apps, and interactive media. It’s also used in business applications for automating customer support and generating audio content.

5. Voice Dream Reader

- Features: Voice Dream Reader is an app that converts text into speech. It supports many languages and provides various customization options, like adjusting the voice’s speed and tone.

- Benefits: This tool is particularly helpful for people with reading difficulties, such as dyslexia. It makes reading accessible by allowing users to listen to books, articles, and documents.

6. Synthesia AI

- Access over 230 diverse AI avatars representing various ethnicities and genders.

- Create custom avatars (your digital twin) by recording yourself, enabling personalized video content.

- Eliminates production bottlenecks: no actors, cameras, or studios needed.

- NYPost reports users saving hours per video and creators earning ~$20K–60K monthly using Synthesia-enabled automation.

Applications of AI Voice Generators

Imagine how cool it would be if your toys, apps, and gadgets could all talk to you. AI voice generators are used in many exciting ways:

1. Virtual Assistants

- Example: Siri, Alexa, and Google Assistant use AI voice generators to interact with users. They can answer questions, set reminders, and control smart home devices.

2. Customer Service

- Example: Automated call centers use AI voices to handle customer inquiries. This helps businesses provide 24/7 support without needing human operators all the time.

3. Education and eLearning

- Example: eLearning platforms use AI voices to read out lessons and materials. This makes learning more interactive and accessible, especially for people who prefer listening over reading.

4. Accessibility

- Example: Screen readers use AI voices to help visually impaired users navigate websites and apps. They read out text on the screen, making digital content accessible to everyone.

5. Entertainment

- Example: Video games and animated movies use AI voices for characters. This adds depth to the characters and makes the stories more engaging.

6. Translation Services

- Example: AI voice generators in translation apps can convert spoken words from one language to another in real time. This helps people communicate across different languages easily.

By understanding these leading AI voice generation tools, their features and benefits, and the various applications, we can appreciate how they enhance our interactions with technology and make our lives easier and more fun!

AI Voice Generators

In Entertainment and Media

Imagine watching your favorite cartoon and hearing a robot voice that sounds just like a human. AI voice generators are used a lot in entertainment and media to make experiences more fun and engaging:

- Animated Movies and TV Shows: Think about animated characters that talk and sing. AI voice generators can create voices for these characters, making them sound lively and realistic. This technology helps in creating diverse voices without needing multiple actors.

- Video Games: Imagine playing a video game where every character has a unique voice. AI voice generators are used to give voices to game characters, adding more depth and immersion to the gaming experience.

- Audiobooks and Podcasts: Think of listening to a book or a story read out loud by a computer that sounds just like a real person. AI voice generators can produce high-quality audiobooks and podcasts, making stories come to life.

In Customer Service and Support

Imagine calling a company and getting help from a robot that talks just like a human. AI voice generators are very useful in customer service and support:

- Automated Call Centers: When you call customer service, sometimes a computer answers first. AI voice generators can handle basic questions and direct you to the right department, reducing wait times and improving efficiency.

- Chatbots: Imagine chatting with a robot online that can also talk to you. AI voice generators make chatbots more interactive by giving them the ability to speak. This helps in providing quick and clear answers to customer queries.

- Virtual Assistants: Think about using an app to book a flight or check your bank balance, and the app talks to you. Virtual assistants like Siri, Alexa, and Google Assistant use AI voice generators to help you with everyday tasks, making interactions more personal and convenient.

In Accessibility Tools

Imagine not being able to see and having a computer read everything out loud to you. AI voice generators play a crucial role in accessibility tools, making technology more inclusive:

- Screen Readers: For people who are blind or have low vision, screen readers read out text displayed on the screen. AI voice generators make these readings sound natural and easy to understand, helping users navigate the internet and use apps.

- Voice-Controlled Devices: Imagine controlling your computer or smartphone using just your voice. AI voice generators are used in voice-controlled devices to provide feedback and confirm actions, making it easier for people with physical disabilities to use technology.

- Educational Tools: Think about students with learning disabilities who struggle with reading. AI voice generators can read out textbooks and learning materials, making education more accessible and helping students learn more effectively.

Benefits of AI Voice Generators

Imagine a magical tool that makes technology easier and more fun to use. AI voice generators have many benefits:

- Improved Accessibility: AI voice generators help people with disabilities by providing spoken feedback and allowing voice control of devices. This makes technology accessible to everyone, regardless of their physical abilities.

- Enhanced User Experience: Think about how nice it is to hear a friendly voice. AI voice generators make interactions with technology more natural and engaging, whether you’re talking to a virtual assistant, listening to an audiobook, or playing a video game.

- Cost-Effective: Imagine needing many different voices for a project but not having to hire multiple actors. AI voice generators can create various voices quickly and at a lower cost, making them a cost-effective solution for businesses and creators.

- Consistency: AI voice generators provide consistent and clear speech, which is especially important in customer service and support. This ensures that customers receive the same high-quality experience every time they interact with the system.

- Multilingual Support: AI voice generators can speak many languages, helping businesses reach a global audience. This is useful in customer service, education, and entertainment, where it’s important to communicate effectively with people from different linguistic backgrounds.

By understanding how AI voice generators are used in entertainment and media, customer service and support, and accessibility tools, we can see how they improve our interactions with technology and make the world a more inclusive and engaging place.

AI Voice Generators

Cost and Time Efficiency

Imagine having a magic helper that can save you a lot of time and money. AI voice generators are like that magic helper, providing several benefits in terms of cost and time efficiency:

- Automating Tasks: Think about customer service calls. Instead of hiring many people to answer the phones, companies can use AI voice generators to handle simple queries. This saves money because one AI system can do the work of many people, and it can work 24/7 without getting tired.

- Creating Content Quickly: Imagine needing a narrator for a new audiobook. Hiring a human narrator takes time and can be expensive. With AI voice generators, you can create high-quality voice recordings quickly and at a lower cost. This is especially useful for businesses that need to produce a lot of content fast.

- Scaling Efforts: Picture a company expanding its services to new countries. Instead of hiring new staff who speak different languages, they can use AI voice generators that support multiple languages. This allows the company to serve more customers without significantly increasing costs.

Enhancing User Experience

Imagine using a device that feels like it understands and responds to you just like a friend. AI voice generators can enhance user experiences in many ways:

- Natural Interactions: Think of a virtual assistant like Siri or Alexa. These AI voice generators use natural, human-like speech, making interactions more pleasant and engaging. This naturalness helps users feel more comfortable and satisfied with the technology.

- Personalization: Imagine a voice assistant that knows your name and remembers your preferences. AI voice generators can be customized to use specific voices, tones, and even accents that users prefer. This makes the experience feel more personal and tailored to individual needs.

- Instant Feedback: Think about asking a question and getting an immediate response. AI voice generators provide instant verbal feedback, which is faster than typing and reading. This quick response time enhances the overall user experience, making it more efficient and enjoyable.

Accessibility and Inclusivity

Imagine a world where everyone, regardless of their abilities, can use technology easily. AI voice generators play a crucial role in making this possible:

- Helping the Visually Impaired: Imagine you cannot see the text on a screen. AI voice generators can read out loud what’s written, helping visually impaired people navigate the internet, read emails, and access information.

- Assisting with Learning Disabilities: Think about students who struggle with reading. AI voice generators can read textbooks and learning materials out loud, helping these students learn more effectively.

- Enabling Voice Control: Imagine controlling your smartphone or computer using just your voice. Paired with speech recognition, which handles the listening, AI voice generators let devices answer spoken commands out loud in a natural, responsive way. This allows people with physical disabilities to interact with technology without needing to use their hands.

Challenges and Limitations

While AI voice generators are amazing, they also face some challenges and limitations:

- Accuracy and Pronunciation: Imagine a computer mispronouncing your name or a word you know well. AI voice generators sometimes struggle with accurately pronouncing names, technical terms, or words from different languages. This can lead to misunderstandings.

- Lack of Emotional Understanding: Think of a robot trying to comfort you but not really understanding your feelings. AI voice generators can mimic emotions, but they don’t truly understand context or feelings like humans do. This can make their responses feel less genuine in sensitive situations.

- Privacy Concerns: Imagine your conversations being stored somewhere you don’t know about. AI systems often need data to improve, which can raise privacy concerns. Users might worry about how their data is being used and whether their conversations are being recorded.

- Dependence on Technology: Think about relying too much on a tool and not knowing what to do if it fails. Over-reliance on AI voice generators can be problematic if the technology experiences issues or downtime. It’s important to have backup plans in place.
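The backup plan mentioned in the last point can be pictured with a small Python sketch. It is only an illustration under stated assumptions: `cloud_voice` and `offline_voice` are hypothetical stand-ins for real voice engines, and the outage is simulated.

```python
def speak_with_fallback(text, engines):
    """Try each engine in order and return (name, audio) from the first that works."""
    errors = []
    for name, synthesize in engines:
        try:
            return name, synthesize(text)
        except Exception as exc:
            # Remember why this engine failed, then move on to the next one.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all voice engines failed: " + "; ".join(errors))


def cloud_voice(text):
    # Hypothetical primary engine; here it simulates an outage.
    raise ConnectionError("service unavailable")


def offline_voice(text):
    # Hypothetical backup engine; returns placeholder bytes instead of real audio.
    return f"[offline audio for: {text}]".encode()


name, audio = speak_with_fallback(
    "Your order has shipped.",
    [("cloud", cloud_voice), ("offline", offline_voice)],
)
print(name)  # prints "offline" because the simulated cloud engine failed
```

Listing the engines in order of preference keeps the backup rule in one place, so adding a third option later only means adding one more entry to the list.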

Taken together, the cost and time savings, the better user experience, and the improved accessibility show how this technology improves our lives, while the challenges above point to areas that still need further development.

Ethical Concerns

Imagine if your robot friend said something mean or unfair. AI voice generators face ethical concerns, such as:

- Bias: Sometimes, AI can learn bad habits from the data it is trained on. If the data has biases, the AI might say things that are unfair or discriminatory.

- Privacy: AI systems often need data to get better, but this raises concerns about how personal information is used and stored. People might worry that their conversations are being recorded without their consent.

- Misinformation: AI voice generators can be used to spread false information or create fake audio clips that sound real. This can lead to people believing things that aren’t true.

Technical Limitations

Imagine a toy that sometimes doesn’t work right. AI voice generators have some technical limitations, like:

- Accuracy: AI might mispronounce words, especially names or technical terms, which can be confusing.

- Understanding Context: AI can mimic emotions but doesn’t truly understand feelings, making it less effective in sensitive situations.

- Dependence on Data: AI needs a lot of data to function well, and poor-quality data can lead to poor performance.
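One common workaround for the accuracy problem is to respell tricky words before the text reaches the voice. The Python sketch below shows the idea; the `PRONUNCIATIONS` entries are hypothetical examples, and real respellings depend on your content and the voice you use.

```python
import re

# Hypothetical respellings: written form -> how we want it spoken.
PRONUNCIATIONS = {
    "Nguyen": "Win",
    "SQL": "sequel",
}


def apply_pronunciations(text, overrides=PRONUNCIATIONS):
    """Replace whole words that have a respelling; leave everything else alone."""
    if not overrides:
        return text
    # \b keeps the match to whole words, so "MySQL" is not changed.
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, overrides)) + r")\b")
    return pattern.sub(lambda match: overrides[match.group(1)], text)


print(apply_pronunciations("Dr. Nguyen teaches SQL."))
# prints: Dr. Win teaches sequel.
```

Many tools offer a built-in pronunciation dictionary that does the same job, so a script like this is mainly useful when yours does not.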

Potential Misuse

Imagine someone using your toy for bad purposes. AI voice generators can be misused in ways like:

- Creating Deepfakes: People can create fake audio recordings that sound real, which can be used to deceive others.

- Spam and Scams: AI can be used to make automated calls that trick people into giving away personal information.

- Impersonation: AI voices can mimic real people, leading to identity theft or other fraudulent activities.

Future of AI Voice Generators

Innovations on the Horizon

Think of your robot friend learning new tricks. Future innovations in AI voice generators include:

- Better Naturalness: AI voices will become even more human-like, with improved intonation and emotional expression.

- Multilingual Support: AI will be able to switch between languages more seamlessly, helping people communicate across language barriers.

- Real-Time Processing: AI will process speech faster, making real-time applications more efficient.

Potential Future Applications

Imagine all the new things your robot friend could do. Future applications of AI voice generators include:

- Virtual Reality: AI voices can create more immersive experiences in virtual reality games and environments.

- Personalized Learning: AI can tailor educational content to each student’s needs, providing personalized tutoring.

- Healthcare Support: AI can assist doctors by providing real-time patient data and treatment suggestions.

AI Voice Generators in Different Industries

Healthcare

Imagine a doctor who can talk to you anytime. AI voice generators in healthcare can:

- Assist with Diagnosis: AI can help doctors by reading patient data and suggesting possible diagnoses.

- Provide Patient Support: AI can remind patients to take their medication or follow treatment plans.

Education

Think of a teacher who is always available to help. AI voice generators in education can:

- Offer Personalized Tutoring: AI can provide one-on-one help to students, explaining concepts in ways they understand.

- Enhance Accessibility: AI can read textbooks out loud for students with reading difficulties or visual impairments.

Marketing and Advertising

Imagine a friendly voice telling you about new products. AI voice generators in marketing and advertising can:

- Create Engaging Content: AI can produce personalized advertisements that speak directly to individual customers.

- Improve Customer Interaction: AI can answer customer queries and provide information about products and services.

Case Studies

Successful Implementations of AI Voice Generators

Imagine stories of robots doing great things. Here are some successful implementations:

- Google Duplex: Google’s AI can make phone calls to book appointments, sounding just like a human.

- Lyrebird: This company built voice-cloning technology that can imitate a person’s voice from a short recording, with uses such as more realistic voices for video game characters.

Lessons Learned

Think about what we can learn from these successes:

- Quality Data is Key: High-quality data leads to better AI performance.

- Continuous Improvement: AI systems need regular updates to stay effective and accurate.

Tips for Choosing the Right AI Voice Generator

Key Factors to Consider

Imagine picking the best toy from a shelf. Here are key factors to consider:

- Naturalness of Voice: How human-like does the voice sound?

- Customization Options: Can you adjust the voice’s pitch, speed, and emotional tone?

- Language Support: Does it support the languages you need?

Comparing Different Tools

Think of comparing different toys to find the best one. Here’s a comparison:

- Google Text-to-Speech: Great for naturalness and language support.

- Amazon Polly: Offers extensive customization options.

- IBM Watson Text to Speech: Known for accuracy and reliability.

How to Get Started with AI Voice Generators

Step-by-Step Guide

Imagine setting up your new toy for the first time. Here’s a step-by-step guide:

- Choose a Tool: Decide which AI voice generator suits your needs.

- Sign Up and Set Up: Create an account and set up the tool on your device.

- Input Text: Enter the text you want to convert to speech.

- Customize: Adjust the voice settings to your preference.

- Generate Speech: Click to convert text to speech and listen to the result.
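If you use a tool through code instead of a website, the "Input Text" and "Customize" steps often come down to writing SSML, a W3C markup standard that many services, including Amazon Polly and Google Cloud Text-to-Speech, accept. Here is a minimal Python sketch of that idea. The `build_ssml` helper is a made-up name for illustration, and which `rate` and `pitch` values work depends on the service and voice you choose.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, rate="medium", pitch="medium"):
    """Wrap plain text in SSML with a prosody element for speed and pitch."""
    body = escape(text)  # escape &, < and > so the text cannot break the markup
    return (
        "<speak>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>{body}</prosody>"
        "</speak>"
    )


# Step 3 (input text) and step 4 (customize) in one call.
ssml = build_ssml("Tom & Jerry starts at 5 pm.", rate="slow", pitch="low")
print(ssml)
# prints: <speak><prosody rate="slow" pitch="low">Tom &amp; Jerry starts at 5 pm.</prosody></speak>
```

The final "Generate Speech" step would send this string to your chosen service, which is left out here because every tool does it differently.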

Best Practices

Think about how to get the most out of your toy:

- Use High-Quality Text: Ensure the text you input is clear and well-written.

- Test Different Voices: Experiment with different voices to find the best fit.

- Keep Improving: Regularly update and refine the AI settings for better performance.

Conclusion

Recap of Key Points

Imagine summarizing all the fun things you learned. Here’s a recap:

- AI voice generators: They turn text into speech, making interactions more natural.

- Uses and benefits: They enhance user experience, improve accessibility, and save time and money.

- Challenges: They face ethical concerns, technical limitations, and potential misuse.

The Future Impact of AI Voice Technology

Imagine what your robot friend might do next. AI voice technology will continue to evolve, becoming more advanced and integrated into daily life, making technology more accessible and engaging for everyone.

FAQs

Common Questions and Answers About AI Voice Generators

What is an AI voice generator?

An AI voice generator converts written text into spoken words using artificial intelligence.

How do AI voice generators work?

They use neural networks and machine learning to analyze text and generate natural-sounding speech.

What are the benefits of AI voice generators?

They improve accessibility, enhance user experience, and save time and money.

Can AI voice generators support multiple languages?

Yes, many AI voice generators can speak multiple languages and accents.

Are AI voice generators expensive?

The cost varies, but they are generally cost-effective compared to hiring human voice actors.

What are the ethical concerns with AI voice generators?

Ethical concerns include privacy, bias, and potential misuse for creating deepfakes or spreading misinformation.

By understanding these aspects of AI voice generators, you can appreciate how they work and their impact on our lives, making technology more accessible and enjoyable for everyone!
